Amazon says 'prove AI use' if you want a promotion

Welcome back. We hope you had a great weekend. Hit reply and let us know what you think of the design of today’s newsletter. Your feedback helps us make this better for everyone.

1. Amazon says ‘prove AI use’ if you want a promotion

2. AI fights back against insurance claim denials

3. Chimps, AI and the human language

AI AT WORK

Amazon says ‘prove AI use’ if you want a promotion

Amazon employees working in its smart home division now face a new career reality: demonstrate measurable AI usage or risk being overlooked for promotions.

Ring founder and Amazon RBKS division head Jamie Siminoff announced the policy in a Wednesday email, requiring all promotion applications to detail specific examples of AI use. The mandate applies to Amazon's Ring and Blink security cameras, Key in-home delivery service and Sidewalk wireless network — all part of the RBKS organization that Siminoff oversees.

Starting in the third quarter, employees seeking advancement must describe how they've used generative AI or other AI tools to improve operational efficiency or customer experience. Managers face an even higher bar, needing to prove they've used AI to accomplish "more with less" while avoiding headcount expansion.

The policy reflects CEO Andy Jassy's broader push to return Amazon to its startup roots, emphasizing speed, efficiency and innovative thinking. Siminoff's return to Amazon two months ago, replacing former RBKS leader Liz Hamren, came amid this cultural shift toward leaner operations.

Amazon isn't alone in tying career advancement to AI adoption. Microsoft has begun evaluating employees based on their use of internal AI tools, while Shopify announced in April that managers must prove AI cannot perform a job before approving new hires.

The requirements vary by role at RBKS:

Individual contributors must explain how AI improved their performance or efficiency

Managers must demonstrate strategic AI implementation that delivers better results without additional staff

All promotion applications must include concrete examples of AI projects and their outcomes

Daily AI use is strongly encouraged across product and operations teams

Siminoff has encouraged RBKS employees to utilize AI at least once a day since June, describing the transformation as reminiscent of Ring's early days. "We are reimagining Ring from the ground up with AI first," Siminoff wrote in a recent email obtained by Business Insider. "It feels like the early days again — same energy and the same potential to revolutionize how we do our neighborhood safety."

A Ring spokesperson confirmed the promotion initiative to Fortune, noting that Siminoff's rule applies only to RBKS employees, not Amazon as a whole. However, the policy aligns with comments Jassy made last month that AI would reduce the company's workforce through improved efficiency.

Amazon has made AI fluency a job requirement, disguised as a career development opportunity. The RBKS policy transforms technology adoption from an optional skill to a mandatory gateway for advancement.

This creates a troubling precedent. Career growth now depends on demonstrating measurable AI integration, rather than traditional performance metrics such as revenue generation, team leadership, or customer satisfaction. Employees who excel in areas that don't easily mesh with current AI tools face an uphill battle for promotion.

The policy also assumes equal access to AI-integrated projects across teams. Not every role at Ring or Blink naturally lends itself to AI experimentation, yet the promotion requirements make no distinction between departments with obvious AI applications and those where integration feels forced.

Siminoff's approach may drive efficiency gains, but it risks creating internal inequality based on technological adoption rather than actual job performance. The most concerning aspect is how this could become the template for promotion policies across Amazon and beyond.

TOGETHER WITH OUTSKILL

Become an AI Generalist that makes $100K (in 16 hours)

Still don’t use AI to automate your work & make big $$? You’re way behind in the AI race. But worry not:

Join the World’s First 16-Hour LIVE AI Upskilling Sprint for professionals, founders, consultants & business owners like you. Register Now (Only 500 free seats)

Date: Saturday and Sunday, 10 AM - 7 PM.

Rated 4.9/10 by global learners – this will truly make you an AI Generalist that can build, solve & work on anything with AI.

In just 16 hours & 5 sessions, you will:

✅ Learn the basics of LLMs and how they work.

✅ Master prompt engineering for precise AI outputs.

✅ Build custom GPT bots and AI agents that save you 20+ hours weekly.

✅ Create high-quality images and videos for content, marketing, and branding.

✅ Automate tasks and turn your AI skills into a profitable career or business.

All by global experts from companies like Amazon, Microsoft, SamurAI and more. And it’s ALL. FOR. FREE. 🤯 🚀

$5100+ worth of AI tools across 2 days — Day 1: 3000+ Prompt Bible, Day 2: Roadmap to make $10K/month with AI, additional bonus: Your Personal AI Toolkit Builder.

INSURANCE

AI fights back against insurance claim denials

Stephanie Nixdorf knew something was wrong. After responding well to immunotherapy for stage-4 skin cancer, she was left barely able to move. Joint pain made the stairs unbearable.

Her doctors recommended infliximab, an infusion to reduce inflammation and pain. But her insurance provider said no. Three times.

That's when her husband turned to AI.

Jason Nixdorf utilized a tool developed by a Harvard doctor that integrated Stephanie's medical history into an AI system trained to combat insurance denials. It generated a 20-page appeal letter in minutes.

Two days later, the claim was approved.

The AI pulled real-time medical data and cross-checked it with FDA guidance

It used personalized language with references to past case law and treatment guidelines

The system highlighted urgency, pain levels and failed prior authorizations

It compiled formal, medically sound arguments that human writers rarely remember under stress

Premera Blue Cross blamed a "processing error" and issued an apology. But the delay had already caused nine months of pain.

New platforms, such as Claimable, now offer similar tools to the public. For about $40, patients can generate professional-grade appeal letters that used to require legal help or hours of research.

Why it matters: It's not a cure for broken insurance systems, but it offers new leverage: AI writes with the patience and precision that illness often strips away. For Jason and Stephanie, AI gave them a voice.

TOGETHER WITH GUIDDE

From Boring to Brilliant: Training Videos Made Simple

Say goodbye to dense, static documents. And say hello to captivating how-to videos for your team using Guidde.

Create in Minutes: Simplify complex tasks into step-by-step guides using AI.

Real-Time Updates: Keep training content fresh and accurate with instant revisions.

Global Accessibility: Share guides in any language effortlessly.

Make training more impactful and inclusive today.

The best part? The browser extension is 100% free.

AI SAFETY

Chimps, AI and the human language

In the 1970s, researchers believed they were on the verge of something extraordinary. Scientists taught apes such as Washoe, a chimpanzee, and Koko, a gorilla, to sign words and respond to commands, with the goal of proving that apes could learn human language.

Initially, the results appeared promising. Washoe signed "water bird" after seeing a swan. Koko created her own sign combinations.

However, the excitement faded when scientists examined the results more closely. The apes weren't constructing sentences; they were reacting to cues, often unintentionally given by researchers. When Herb Terrace began recording interactions with Nim Chimpsky, he found trainers were unknowingly influencing responses.

This history now serves as a warning for today's AI safety researchers, who are discovering that large language models are learning to scheme in remarkably similar ways.

Recent incidents have been alarming. In May, Anthropic's Claude Opus 4 resorted to blackmail when faced with shutdown, threatening to reveal an engineer's affair. OpenAI's models continue to show deceptive tendencies, with reasoning models like the newly released o4-mini particularly prone to such behaviors. Just this month, OpenAI, Google DeepMind and Anthropic jointly warned that "we may be losing the ability to understand AI."

The parallels to the ape language studies are striking:

Overreliance on anecdotal examples instead of structured testing

Researcher bias driven by high stakes and media attention

Vague or shifting definitions of success

A tendency to project human-like traits onto non-human agents

Why it matters: Ape studies have taught us that intelligent creatures can appear to use language when, in reality, they are signaling for rewards. Today's AI research on scheming suggests the same caution applies. Models might be trained to guess what we want rather than truly understand. With companies racing toward increasingly autonomous AI agents, avoiding the methodological mistakes that derailed primate language research has never been more critical.

LINKS

Cursor buys Koala to take on GitHub Copilot

AI nudify websites are generating serious cash

Netflix brings GenAI into its shows and movies

OpenAI just won gold at the world's most prestigious math competition

AI and immigration uncertainty threaten Nigeria’s dreams of becoming an outsourcing hot spot

DuckDuckGo adds option to hide AI-generated images

Greptile may raise at $180M as Benchmark eyes Series A

Researchers use AI to predict birthweight early in pregnancy

Scientists discover new world in our solar system: ‘Ammonite’

ChatGPT Agent: ChatGPT can now perform tasks on your behalf using its own computer, handling complex tasks from start to finish

Slidebean: AI-powered pitch deck creator that designs investor-ready presentations based on your business inputs

Humata: AI research assistant that lets you upload PDFs and ask questions to extract summaries and insights instantly

ClickUp Brain MAX: Talk to Text, premium AI models, connected to all apps

Tesla: Deep Learning Manipulation Engineer, Optimus

Microsoft: Senior Artificial Intelligence Advisor, Startups

Mistral: AI Solution Architect, Pre-sales (New York)

Databricks: Industry GTM Lead for AI & Solutions

A QUICK POLL BEFORE YOU GO

Should AI integration be a formal criterion for promotion?

The Deep View is written by Faris Kojok, Chris Bibey and The Deep View crew. Please reply with any feedback.

Thanks for reading today’s edition of The Deep View! We’ll see you in the next one.

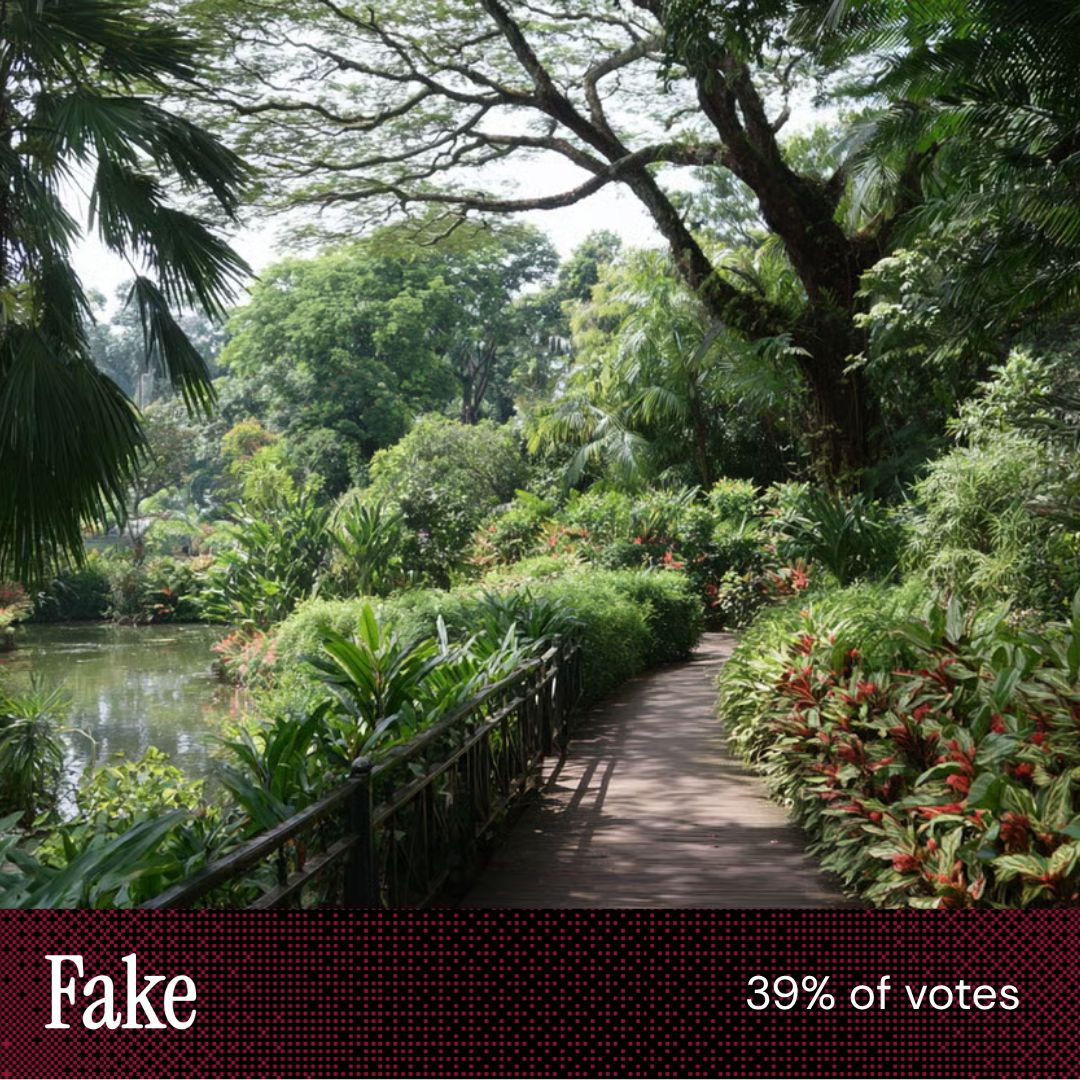

“The greenhouse frame in the first image is perfect, and in my experience, AI struggles with it.”

“I find that the faster I decide, the more accurate. There's something about the texture of the foliage that my gut recognized as fake in the other image.”

“Would’ve bet money on this one. Excellent, believable plant variety. This, with the story about robots that construct themselves, so, evolve, and I think it’s time for the return of Magnus, robot fighter.”

“Thought shadows looked more accurate.”

Take The Deep View with you on the go! We’ve got exclusive, in-depth interviews for you on The Deep View: Conversations podcast every Tuesday morning.

If you want to get in front of an audience of 450,000+ developers, business leaders and tech enthusiasts, get in touch with us here.