⚙️ 60% of managers use AI to decide promotions and firings

Welcome back. Scale AI has laid off 200 employees (14% of its staff) and severed ties with 500 contractors, with interim CEO Jason Droege admitting that they had "too quickly scaled" their core data-labeling business. This comes just a month after Meta's $14.3 billion investment and the poaching of its CEO, which prompted several major customers to sever ties with the company immediately. Remember when Zuckerberg said he was buying Scale AI for their expertise? It turns out that expertise in rapid downsizing wasn't what he had in mind.

In today’s newsletter:

🧑‍🤝‍🧑 Digital twin that predicts diseases before symptoms emerge

🤝 Meta and AWS join forces to woo developers

🏢 AI is quietly reshaping workplace power

🧑‍🤝‍🧑 Digital twin that predicts diseases before symptoms emerge

Source: Midjourney v7

What if your doctor could predict diabetes two years before any symptoms appear? Not through crystal ball gazing, but by running simulations on your personal AI twin.

An international team of researchers, including scientists from the Weizmann Institute of Science and MBZUAI, has created exactly that: a "digital twin" that simulates your body's health future and flags disease risks before they manifest. The breakthrough, published in Nature Medicine, represents a fundamental shift from reactive to predictive healthcare.

The AI system is powered by the Human Phenotype Project, one of the most comprehensive health studies ever conducted. Over 30,000 participants undergo extensive medical assessments every two years for 25 years, tracking everything from sleep patterns to microbiome samples to continuous glucose monitoring.

Built on Pheno.AI's platform, the model learns how 17 body systems typically age and assigns each a "biological age" score. When patterns deviate from normal aging trajectories, the system flags potential disease risks.

The digital twin's capabilities:

Detected prediabetic risk in 40% of people considered healthy by standard tests

Predicted glucose levels and diabetes onset two years in advance

Analyzed 10 million glucose readings through a specialized "Gluformer" model

Simulated treatment effects before implementation

Tailored insights by sex, ethnicity, lifestyle and chronological age

Professor Eran Segal's team has expanded globally with satellite branches in Japan and the UAE, aiming for 100,000 participants. They're developing a consumer app that will put this predictive power directly in users' hands through a personal health dashboard.

The ultimate goal isn't just prediction; it's prevention. Your digital twin doesn't just forecast your health future; it helps you rewrite it.

From Code to Kitchens

The future of AI will be decided in the field, not a lab. That’s why investors pay more attention to what comes after the prototype – and why 39K+ investors like you are already backing Miso Robotics.

Miso honed its AI-powered kitchen robot Flippy Fry Station for 200K+ hours in live kitchens with the help of leaders like NVIDIA and Amazon.

Now, it’s launched its first fully commercial version. Already used by brands like White Castle to boost profits up to 3X, Flippy sold out its initial units in one week.

Flippy is officially targeting 100K+ needy US fast-food locations. There’s even a new major partner announcement coming to help them get there.

🤝 Meta and AWS join forces to woo developers

Source: ChatGPT 4o

Meta is spending millions to ensure developers choose its Llama models over OpenAI's offerings, and Amazon is footing part of the bill.

The two tech giants launched a joint program offering $200,000 in AWS credits plus six months of engineering support to 30 early-stage startups building with Llama. The initiative represents a direct challenge to OpenAI's developer ecosystem, which has dominated AI application development since the launch of ChatGPT.

Meta is investing heavily in open-source AI leadership. While OpenAI charges for API access and maintains tight control over GPT models, Meta gives away Llama for free. Llama models have been downloaded over 1 billion times since launching in 2023.

But that open-source commitment may be wavering. Meta's newly formed Superintelligence Lab is discussing abandoning the company's most powerful open-source model, called Behemoth, in favor of developing a closed model. The discussions, involving 28-year-old Chief AI Officer Alexandr Wang and other top lab members, would mark a major philosophical shift for Meta.

AWS benefits by keeping startups on its infrastructure. As cloud costs soar with AI workloads, the partnership helps Amazon remain central to model hosting and compute services regardless of which company ultimately wins.

The program offers:

$6 million total in AWS infrastructure credits

Direct technical support from Meta and AWS engineering teams

Pre-built integrations to reduce development time

Access to Llama's latest models and updates

The collaboration follows LlamaCon, where Meta focused entirely on undercutting OpenAI, releasing consumer-facing chatbot apps and developer APIs designed to expand Llama adoption. Meta's approach contrasts sharply with that of proprietary AI companies. Recent analysis, however, shows Meta trailing China's DeepSeek despite massive infrastructure investments.

The partnership signals a shift in the AI race from model capabilities to developer adoption and ecosystem control, even as Meta's commitment to open-source AI faces its biggest test yet.

Want To Stay On Top Of The Latest SMB Trends?

Look no further. Salesforce, the premier CRM platform, doesn't just offer powerful AI CRM solutions; they also keep you on the cutting edge of the small business world.

Their newest report keeps you informed with the latest SMB insights.

Inside you’ll find insights from over 3,000 small and medium business leaders, including how they’re building their tech stacks, how they’re leveraging AI to boost revenue and efficiency, and their biggest priorities (and challenges) for the upcoming year.

Delta wants to bring AI copilots to the sky

AI is now teaming up with traditional medicine

Fed up with ChatGPT, Latin America is building its own

Google supercharges Search for AI power users

Another top OpenAI researcher jumps ship to Meta

xAI is now hiring engineers to build anime girlfriends

Trump unveils $92B+ bet on AI and energy dominance

AI rewrites the rules for how the energy game is played

Anthropic hires back two of its employees just two weeks after they left for Cursor

Former top Google researchers have made a new kind of AI agent

AI creeps into the risk register for America's S&P 500

General Motors: Sr. AI Developer & Advanced Methods

AMD: Lead Data Scientist

Hume: A voice-based LLM that detects human emotion in conversations to improve AI interactions

Recast Studio: An AI-powered video editor that helps marketing teams edit and repurpose their video and audio content

Feathery: Build custom AI-powered forms that integrate with backend logic, workflows and design systems

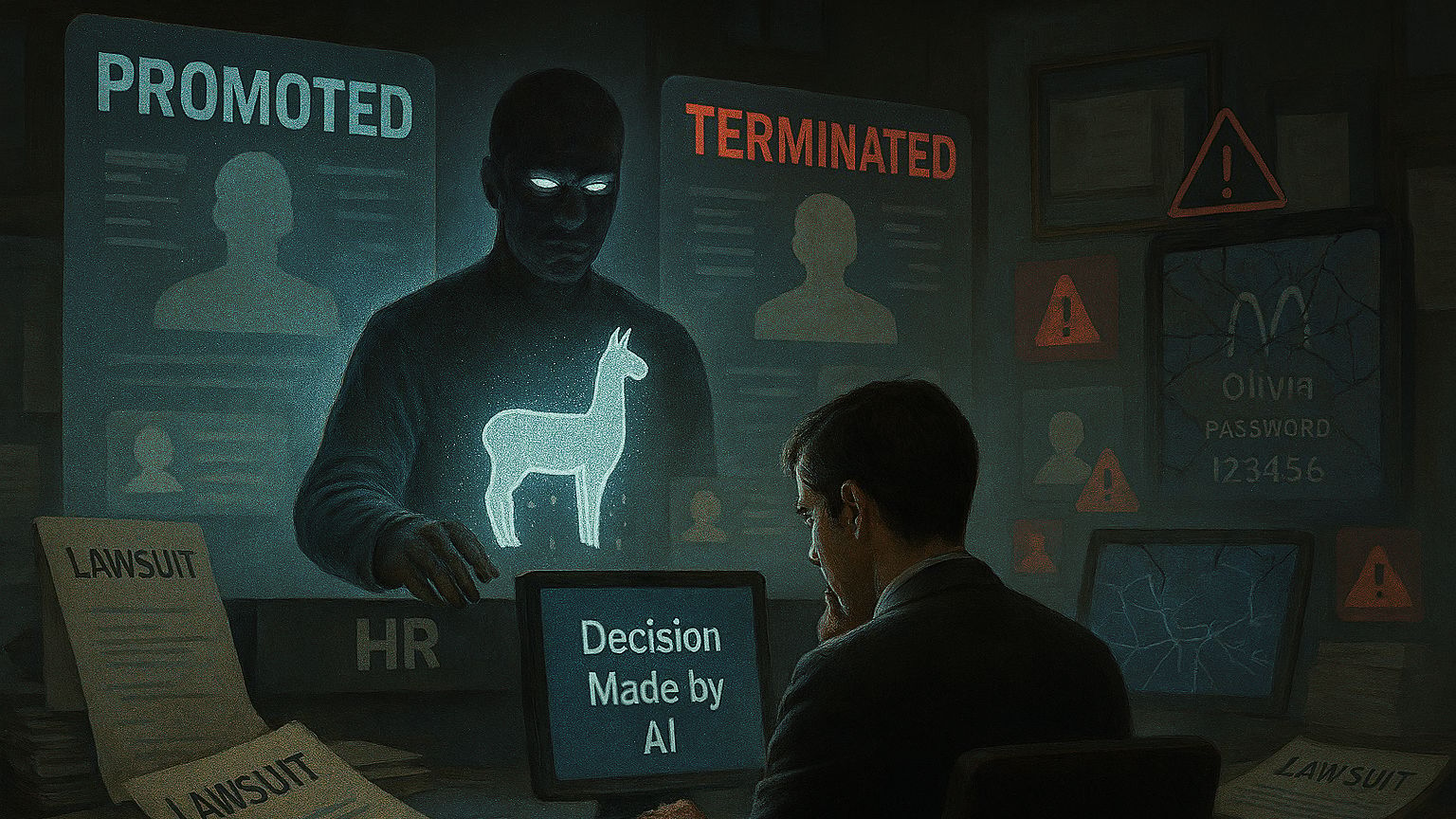

🏢 AI is quietly reshaping workplace power

Source: ChatGPT 4o

Your next promotion might be decided by an algorithm. So might your firing.

Sixty percent of managers now use AI to make consequential workplace decisions, including who gets promoted and terminated. This marks a fundamental shift in how corporate power operates, with artificial intelligence becoming the invisible hand guiding career trajectories across the United States.

The transformation is accelerating rapidly. Microsoft has made AI tools non-optional for employees, while 88% of C-suite executives globally say speeding up AI adoption is critical this year.

But AI hiring is becoming a litigation nightmare. In May, a federal judge allowed a nationwide class action against Workday to proceed, with plaintiffs claiming that the company's AI screening tools discriminate against applicants over 40. Derek Mobley alleged he was rejected from over 100 jobs in seven years due to algorithmic bias based on age, race and disabilities.

Meanwhile, McDonald's AI hiring system suffered an embarrassing security breach. Researchers discovered that the company's "Olivia" chatbot, used by 90% of McDonald's franchisees, exposed the personal data of 64 million job applicants because admin access was protected by the password "123456."

The scope of AI bias is staggering. University of Washington research found that in AI resume screenings:

Algorithms favored white-associated names in 85% of cases

Female names were favored in only 11% of cases

Black male applicants were disadvantaged 100% of the time compared to white males in some scenarios

Yet the adoption continues largely in secret. Research shows employees are secretive about AI use at work, creating a culture of concealment around tools reshaping fundamental workplace dynamics.

This secrecy extends to management, where AI decision-making often occurs without transparency about how algorithms influence careers. Robert Half found that 54% of hiring managers seek AI-linked skills, while AI platforms help identify early signs of employee dissatisfaction.

HR professionals report widespread AI adoption but cite major concerns:

Algorithmic bias in hiring and promotion decisions

Data privacy violations and security breaches

Potential to amplify existing workplace discrimination

Legal experts warn that over 30 states have formed AI committees, issuing recommendations that are likely to become legislation.

PwC predicts that companies will be forced to address AI governance systematically, with stakeholders demanding transparency similar to that in financial reporting. The consulting firm warns that "rigorous assessment of AI risk management practices will become nonnegotiable."

We're witnessing algorithmic management on a scale that makes surveillance capitalism look quaint. When 60% of managers use AI for promotion and termination decisions, often without employee knowledge, we're not automating processes; we're automating power itself.

The McDonald's password debacle and the Workday discrimination lawsuit highlight the lack of preparedness among companies for the responsible deployment of AI. These are previews of systemic failures that occur when human oversight is absent.

The secrecy surrounding the use of managerial AI suggests that organizations are aware that this raises uncomfortable questions about fairness and accountability. But hiding the revolution doesn't make it less real — it just makes it less accountable.

Which image is real?

🤔 Your thought process:

Selected Image 1 (Left):

“The text on posters was the first giveaway. The fake had odd, unrealistic looking text. The real one, legible text that mostly made sense... the geometrics of things... crossbars seemed to just disappear...”

“The "fake" image seemed pristine... never seen armrests like that... The "real" image had poorly managed white balance—a mistake that lots of amateur photographers make, which AI could easily copy, but I didn't think that a model designer would encourage the model to make the same mistake.”

Selected Image 2 (Right):

“No adverts or maps”

“The real image has a distorted-looking US map on it, which misled me.”

💭 Poll results

Here’s your view on “How do you feel after learning that long AI chats multiply carbon emissions exponentially?”:

Had no idea — that’s alarming (25%):

“How would people and governments react if a new fleet of trucks took to the roads that were driverless and consumed 10 times as much fuel as the ones we have now?”

Makes sense but I still use it freely (13%):

“*Also* this is a tech problem the hyper-scalers will solve. The environmental impact is based on power consumption, not method of acquiring energy (like debating coal vs wind power). Which means scaling back energy expenditure makes it cheaper for companies to operate and reduce environmental impact. When most of the arguments about the dangers of AI on the environment are slippery slope or projections that don't assume efficiency gains, I'm not going to pay it much mind. If the environment was a bigger topic back in the dot-com bubble, we would see tons of articles about how bad robo-crawlers are for the environment, but now they aren't even a blip on the radar.”

I want to change how I use AI now (13%):

“I had no idea. It’ll cause me to adjust my usage. Tech definitely needs to figure out more efficient methods. Unfortunately when tech is in this crazy growth phase, that’s often put on the back burner.”

Tech needs to solve this, not users (41%):

“Expecting users to understand all the nuances of AI emissions is unrealistic. It’s squarely on the developers to figure out the best performance to environmental impact ratio.”

Other (8%):

“AI could help solve the problem. Nuclear energy was to go.”

The Deep View is written by Faris Kojok, Chris Bibey and The Deep View crew. Please reply with any feedback.

Thanks for reading today’s edition of The Deep View! We’ll see you in the next one.

*Indicates sponsored content

*Miso Disclaimer: This is a paid advertisement for Miso Robotics’ Regulation A offering. Please read the offering circular at invest.misorobotics.com.